I recently gave a talk at Iowa Code Camp on Working with WebJobs. I got a follow-up request for resources on converting an existing console application to a WebJob. The process is relatively simple and involves three steps.

Create an Azure WebApp to host your WebJob.

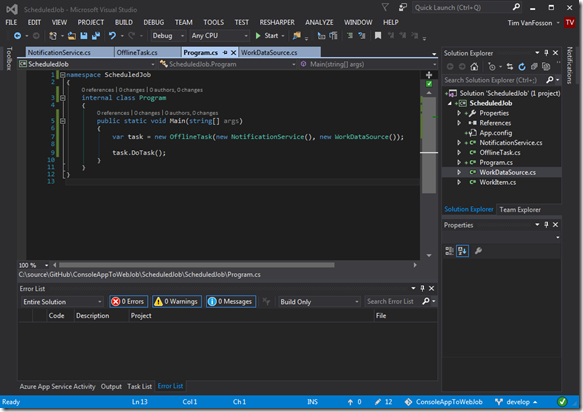

Let’s say you have a solution that contains a console application such as the one shown below. It has a Program class containing the Main method. Main creates the object that performs the task, along with its dependencies, and then invokes one or more methods on that object to do the work. You’d probably also have some logging and error handling, but we’ll omit those for the sake of clarity.

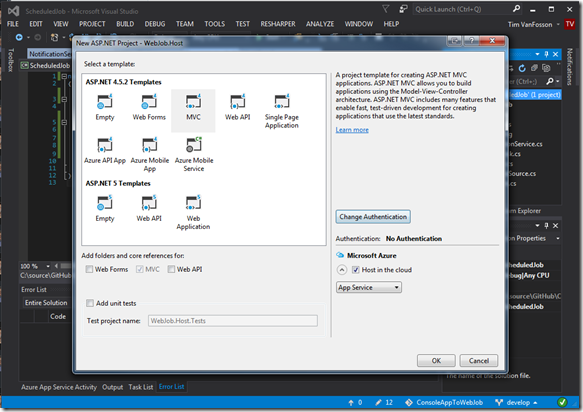

Now, add a WebApp project to the solution. We’ll call it WebJob.Host. I’m creating an MVC project because I want a single action that displays the version of the deployed code as a sanity check. If you have an existing site that you deploy as an Azure WebApp, you could use that instead. It will need to be in the same solution as the project we’re converting to a WebJob. Remember to update all the packages once you’ve created the project, and to clean up any boilerplate you don’t want. I’m going to get rid of everything but the HomeController and its Index action. Don’t be surprised if Visual Studio needs to restart to finish updating your packages.

The best way to generate your version information automatically is to use the capabilities of your build server. Both TeamCity and AppVeyor support this. If you’re interested in stamping your assembly with version information from your repository without a CI server, I’ve written up a way to do it using MSBuild Community Tasks. I’m going to take the easy way out and manually keep my AssemblyInformationalVersion up to date for this project.
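One way to surface that version in the HomeController's Index action is to read AssemblyInformationalVersionAttribute through reflection. The sketch below is an assumption about how you might do this, not code from the post. VersionInfo is a hypothetical helper name. In a .NET Framework project, the attribute itself would typically be declared in Properties/AssemblyInfo.cs, e.g. `[assembly: AssemblyInformationalVersion("1.0.0")]`.

```csharp
using System;
using System.Reflection;

// Hypothetical helper (not from the post): reports the version of the assembly
// that contains it, for display on a simple "what's deployed?" page.
public static class VersionInfo
{
    public static string Current()
    {
        Assembly assembly = typeof(VersionInfo).Assembly;

        // Prefer the informational version (set by hand or stamped by a build server).
        var info = assembly.GetCustomAttribute<AssemblyInformationalVersionAttribute>();
        if (!string.IsNullOrWhiteSpace(info?.InformationalVersion))
        {
            return info.InformationalVersion;
        }

        // Fall back to the numeric assembly version if the attribute is missing.
        return assembly.GetName().Version?.ToString() ?? "unknown";
    }

    public static void Main()
    {
        // In the MVC host, HomeController.Index might do something like:
        // ViewBag.Version = VersionInfo.Current();
        Console.WriteLine(VersionInfo.Current());
    }
}
```

Falling back to the assembly version means the page still shows something useful if the informational attribute is ever dropped from AssemblyInfo.cs.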

Make sure your hosting web application works before adding a WebJob to it.

Convert Your App to a WebJob

First, we need to add the appropriate WebJobs packages. At this point I’ll assume you’re not using any Azure resources. If you are, I’ll assume you can figure out the additional WebJobs packages you’ll need to work with them. If you’re using a Service Bus queue or topic listener, it might be easier to start from scratch: create a WebJob with an appropriate listener, then merge your existing dependencies into it rather than trying to convert the older Service Bus client code.

For a standard WebJob that is run on a schedule, you’ll need the following package:

Microsoft.Azure.WebJobs

(Note: this package has several dependencies, which in turn have more dependencies. Don’t be alarmed by this.)

Again, it’s good practice to update your dependencies after adding them to your project so that you have the latest versions.

This is what my packages.config file looks like after installing only the WebJobs package and its dependencies.

```xml
<?xml version="1.0" encoding="utf-8"?>
<packages>
  <package id="Microsoft.Azure.KeyVault.Core" version="1.0.0" targetFramework="net452" />
  <package id="Microsoft.Azure.WebJobs" version="1.1.2" targetFramework="net452" />
  <package id="Microsoft.Azure.WebJobs.Core" version="1.1.2" targetFramework="net452" />
  <package id="Microsoft.Data.Edm" version="5.7.0" targetFramework="net452" />
  <package id="Microsoft.Data.OData" version="5.7.0" targetFramework="net452" />
  <package id="Microsoft.Data.Services.Client" version="5.7.0" targetFramework="net452" />
  <package id="Microsoft.WindowsAzure.ConfigurationManager" version="3.2.1" targetFramework="net452" />
  <package id="Newtonsoft.Json" version="9.0.1" targetFramework="net452" />
  <package id="System.Spatial" version="5.7.0" targetFramework="net452" />
  <package id="WindowsAzure.Storage" version="7.1.2" targetFramework="net452" />
</packages>
```

Now that we have the appropriate packages, we can convert our Program so that it sets up and invokes the job as a WebJob. I suggest the following approach. Create a class named Functions (this is the convention) and add a method – it can be static or an instance method, whichever fits your needs best. Migrate the code from your Main method to the new method in the Functions class. Decorate the new method with [NoAutomaticTrigger]. This tells the JobHost not to listen for a trigger for that method, so it runs only when invoked explicitly.

For reference, here is the Program that I started with.

```csharp
internal class Program
{
    public static void Main(string[] args)
    {
        var task = new OfflineTask(new NotificationService(), new WorkDataSource());
        task.DoTask();
    }
}
```

Here is the Functions class that I created to replicate its functionality. You'll notice that I'm using manual dependency injection to make it testable. Don't let that throw you. I think it's a good idea, but I could just as well have copied the contents of Main and pasted them directly into the Execute method. Note also that Execute now has a TextWriter parameter named log. This is the hook into the WebJobs logging facility. For now, we just log messages when the job starts and when it finishes. You can continue to use whatever logging you already have in place. If you choose to integrate with WebJobs logging, you can do so by passing the log parameter to your classes and methods as needed. There are also ways to connect this log facility to an existing one, but that's beyond the scope of this article.

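```csharp
using System.IO;
using Microsoft.Azure.WebJobs;

// The WebJobs SDK's default type locator looks only at public classes, so
// Functions is public here. If NotificationService or WorkDataSource are
// internal, make them public too (or make the two-argument constructor internal).
public class Functions
{
    private readonly NotificationService _notificationService;
    private readonly WorkDataSource _workDataSource;

    // Parameterless constructor used by the JobHost's default activator.
    public Functions()
        : this(new NotificationService(), new WorkDataSource())
    {
    }

    // Lets tests supply their own dependencies.
    public Functions(NotificationService notificationService, WorkDataSource workDataSource)
    {
        _notificationService = notificationService;
        _workDataSource = workDataSource;
    }

    // Not bound to any trigger; Program invokes it explicitly via JobHost.Call.
    [NoAutomaticTrigger]
    public void Execute(TextWriter log)
    {
        log.WriteLine("starting job");
        var task = new OfflineTask(_notificationService, _workDataSource);
        task.DoTask();
        log.WriteLine("job completed");
    }
}
```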
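Because log is an ordinary System.IO.TextWriter, passing it into your own classes also keeps them easy to test: a StringWriter can stand in for the WebJobs log. The sketch below uses a hypothetical LoggingWorker in place of the post's OfflineTask, whose real signature isn't shown, and the int[] work items are likewise an assumption for illustration.

```csharp
using System;
using System.IO;

// Hypothetical stand-in for OfflineTask that accepts the WebJobs log writer.
public class LoggingWorker
{
    private readonly TextWriter _log;

    public LoggingWorker(TextWriter log)
    {
        // TextWriter.Null discards output, so callers without a log still work.
        _log = log ?? TextWriter.Null;
    }

    public int DoTask(int[] workItems)
    {
        var processed = 0;
        foreach (var item in workItems)
        {
            _log.WriteLine($"processing item {item}");
            processed++;
        }

        _log.WriteLine($"processed {processed} item(s)");
        return processed;
    }
}

public static class LoggingWorkerDemo
{
    public static void Main()
    {
        // Inside Functions.Execute you would pass the log parameter instead;
        // here a StringWriter captures the output, as a unit test might.
        var captured = new StringWriter();
        var count = new LoggingWorker(captured).DoTask(new[] { 1, 2 });

        Console.WriteLine(count);
        Console.Write(captured.ToString());
    }
}
```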

Now that we have our functions created, we’ll modify the Main method to set up a JobHost and use it to invoke our new Execute method. Before we do that, though, we need to add some configuration values. We need connection strings for the WebJobs dashboard and for WebJobs storage; by convention these are named AzureWebJobsDashboard and AzureWebJobsStorage. I recommend that you store these in a file that is not checked into source control and reference it from your App.config file, for example with the configSource attribute on the connectionStrings element.

Create a storage account or use an existing one. Copy the connection string from the Azure portal and add it to your connection strings.

```xml
<connectionStrings>
  <add name="AzureWebJobsDashboard" connectionString="your-connection-string-copied-from-Azure" />
  <add name="AzureWebJobsStorage" connectionString="your-connection-string-copied-from-Azure" />
</connectionStrings>
```

Now, in your Main method, remove the code you copied over to Functions.Execute. In its place, add code that creates a JobHostConfiguration and then a JobHost that uses that configuration. Next, use reflection to find the method or methods in your Functions class that you want to execute when the program runs, and invoke each one with the JobHost’s Call method. The JobHost supplies the TextWriter argument automatically when it calls the method. If you want to hook into your favorite dependency injection framework, you can also supply a custom activator by setting the JobHostConfiguration’s JobActivator property to an IJobActivator implementation. There’s an example of this in my WebJobs talk demonstration code.

Here’s the updated Program code after changing it to invoke the Function as a WebJob.

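```csharp
using System.Linq;
using Microsoft.Azure.WebJobs;

internal class Program
{
    public static void Main(string[] args)
    {
        // Picks up the AzureWebJobsDashboard and AzureWebJobsStorage
        // connection strings from configuration.
        var config = new JobHostConfiguration();
        var host = new JobHost(config);

        // Find every public method on Functions marked [NoAutomaticTrigger].
        var tasks = typeof(Functions).GetMethods()
            .Where(m => m.GetCustomAttributes(typeof(NoAutomaticTriggerAttribute), false).Any());

        // Invoke each one; the host supplies the TextWriter log argument.
        foreach (var method in tasks)
        {
            host.Call(method);
        }
    }
}
```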
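The reflection filter in Main relies on Type.GetMethods(). With no arguments, that call returns only public methods, both instance and static, including inherited ones. A method marked [NoAutomaticTrigger] but declared private is therefore silently skipped. The self-contained sketch below demonstrates that behavior. It uses a hypothetical RunOnStartAttribute in place of NoAutomaticTriggerAttribute so it runs without the WebJobs package, and DemoFunctions and DiscoveryDemo are likewise illustrative names.

```csharp
using System;
using System.Linq;

// Hypothetical marker attribute standing in for NoAutomaticTriggerAttribute.
[AttributeUsage(AttributeTargets.Method)]
public sealed class RunOnStartAttribute : Attribute { }

// Hypothetical functions class with a mix of visibilities and markers.
public class DemoFunctions
{
    [RunOnStart] public void Execute() { }
    [RunOnStart] public static void Cleanup() { }
    public void Helper() { }                 // public but unmarked: filtered out
    [RunOnStart] private void Hidden() { }   // marked but private: GetMethods() never returns it
}

public static class DiscoveryDemo
{
    // Same filter shape as the Main above, returning names for inspection.
    public static string[] FindMarkedMethods(Type type) =>
        type.GetMethods()
            .Where(m => m.GetCustomAttributes(typeof(RunOnStartAttribute), false).Any())
            .Select(m => m.Name)
            .OrderBy(name => name, StringComparer.Ordinal)
            .ToArray();

    public static void Main()
    {
        // prints "Cleanup, Execute"
        Console.WriteLine(string.Join(", ", FindMarkedMethods(typeof(DemoFunctions))));
    }
}
```

So if a job silently never runs, check that its method is public.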

Lastly, to get the job to run on a schedule, add a settings.json file to the project as a Content item (I use Copy Always) that specifies when the job should run. The scheduler in Kudu, the underlying framework that manages WebJobs, uses this file. The format is relatively simple. The only property we’re going to set is "schedule", which has always been enough for me. Its value is a cron-like expression with six fields ({second} {minute} {hour} {day} {month} {day of the week}) specifying, in UTC, when to run the program. The following example runs the WebJob at 5AM UTC every day.

```json
{
  "schedule": "0 0 5 * * *"
}
```
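The schedule has six fields with seconds first, so a five-field expression copied from classic Unix cron won’t be read the way you expect. A small check like the hypothetical ScheduleCheck below could catch that, for example in a unit test that reads the value from settings.json. It is not part of the WebJobs SDK or Kudu. It only counts and labels the fields and does not validate their contents.

```csharp
using System;
using System.Linq;

// Hypothetical helper: labels the six fields of a WebJobs schedule expression.
public static class ScheduleCheck
{
    private static readonly string[] FieldNames =
        { "second", "minute", "hour", "day", "month", "day of week" };

    public static string Describe(string schedule)
    {
        if (schedule == null) throw new ArgumentNullException(nameof(schedule));

        var fields = schedule.Split(new[] { ' ' }, StringSplitOptions.RemoveEmptyEntries);
        if (fields.Length != FieldNames.Length)
        {
            throw new ArgumentException(
                $"Expected {FieldNames.Length} fields (seconds first) but found {fields.Length}: '{schedule}'");
        }

        // Pair each field with its name, e.g. "hour=5".
        return string.Join(", ", FieldNames.Zip(fields, (name, value) => $"{name}={value}"));
    }

    public static void Main()
    {
        // The example above: 05:00:00 UTC every day.
        // prints "second=0, minute=0, hour=5, day=*, month=*, day of week=*"
        Console.WriteLine(Describe("0 0 5 * * *"));
    }
}
```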

Add the WebJob to the WebApp

Now that we have both the Web App and the WebJob set up, we just need to add the WebJob to the WebApp so that when we publish the WebApp, the WebJob is published as well and will run on its schedule.

Right-click the host project (I named it WebJob.Host), choose Add, then choose Existing Project as Azure WebJob.

This brings up a configuration wizard. Choose the project you want to add and give it the name you want to see in the Azure portal. There are some naming restrictions; for example, you can’t use dots in the WebJob name. Set the run mode to OnDemand. Our schedule is included in the settings.json file, so we don’t need to set one here. Click OK to add the WebJob to the WebApp.

This installs the WebJobs publishing package. It adds a webjobs-list.json file to the Properties folder of your Web App. This file lists the jobs that get published with the Web App and their relative locations in the project. It also adds a webjob-publish-settings.json file to the Properties folder of your WebJob project. You might see some JSON validation errors in this file. I haven’t found these to cause problems when deployed, but I generally clean up the unused properties to remove the errors.

Now we’re essentially done. Verify your configuration values and publish your host WebApp. Use the Azure portal (the WebJobs pane under Settings) to check that your job was deployed and will run on the schedule you’ve chosen. Use the Logs to view the messages written to the TextWriter. You can also access Kudu directly via the Tools pane on the Web App to dig deeper when testing and debugging your WebJob.

Code used for this post can be found on GitHub. Note that both the original console app and the converted app (now WebJob) are included in the code so you can compare the before and after conversion states. You would probably only have the single project, converted in place, in your solution.